August 14, 2023


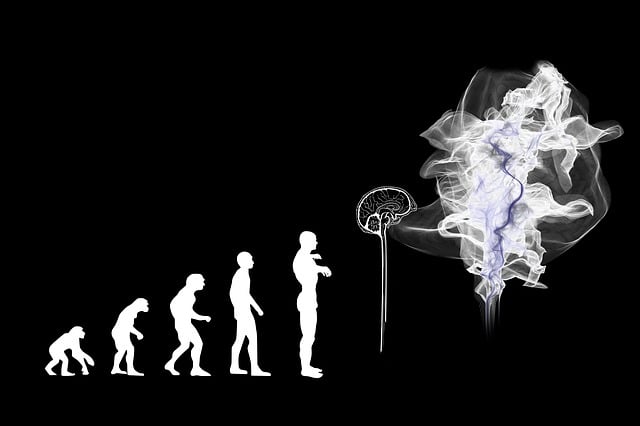

In a recent statement, Tesla co-founder Elon Musk raised concerns about artificial intelligence (AI). That AI could surpass individual human intelligence within a few years is no longer a fantasy. It is possible that we humans could be ruled not by the governments of states but by AI-powered machines. Machines gaining intelligence is nothing new in fantasy and science fiction, where there are ample stories of machines that become intelligent, make decisions, and are even affected by human emotions like love and jealousy. Imagine that this becomes a reality. Imagine that there are no more law enforcement officers, only metallic machines walking about or driving other machines, regulating human and world affairs.

What would such a scenario look like? I hope it never happens, but if it does, despite human efforts to prevent it, what would it look like? Would it be a Terminator-like situation in which AI machines eliminate everything and everyone that does not suit their ‘thinking’? That assumes we can even call the springboard of their action ‘thinking’, and accept that they have a mind as we humans do and that it operates as the human mind does. We do not know whether the mind or intelligence of machines would be ‘rational’, the mode of mental operation that has been worshipped since the Enlightenment, or whether they would follow the accepted canons of rational knowledge production. Their way of operating might instead defy the way we define rationality, or be something not yet defined, or lie beyond human comprehension altogether.

Such a scenario would be frightening, and one hopes it never happens. Musk argues, and I agree, that the superintelligence of machines, even if it surpasses individual human intelligence, would not be potent enough to surpass collective human intelligence. The collective human mind, the aggregation of the best human minds from across the divides of nation, culture, and other human-made barriers, would be powerful enough to check or undo the deleterious effects of artificial intelligence, even while accepting its usefulness in making human life and work more comfortable.

Hence, the key to avoiding a possible human collapse is to develop this collective intelligence, one that keeps human welfare in mind, and apply it to checkmate the possible menace of AI. First, the nations of the world must come together and collaboratively develop norms and standards to regulate AI. The United Nations can provide a global framework for this and put its mechanisms to work in that direction. Regional organizations like the EU have already expressed concerns about the harmful effects of AI. Second, individual nation states must regulate themselves and develop norms and standards in line with the global framework. Global rules have no value unless individual nation states abide by them. Finally, and I think most importantly, individuals and the smaller groups and organizations within each nation state must regulate themselves and abide by national and international rules while exploring the seemingly endless frontiers of AI.

Can these suggested frameworks become a reality, or is artificial intelligence developing so fast that it will undermine or outpace any regulatory framework? One line of argument holds that the AI revolution is happening so fast, and permeating every aspect of human life so quickly, including education, policymaking, communication, and social life, that it is difficult to devise rules to regulate it. It is a kind of gold rush, an exploration of the Wild West, taking place in a realm where laws, rules, and regulations carry little force. It is all about who is outpacing or outmaneuvering whom. AI has already made inroads in the Ukraine conflict, and deepfakes of leaders are saying things that differ from the stated policies of their countries. States are urging their establishments, particularly financial and defense establishments, to make increasing use of AI to bolster their capabilities and outsmart their rivals and enemies. It is perhaps not out of place to argue that nation states are vying with each other and using AI to outmaneuver each other, even without knowing whether the use of AI will be helpful in the long run.

Another dimension of superintelligence needs to be discussed. We talk about the human ego, the range of its actions, and the role it plays in human affairs. Would the superintelligence of machines have a superego, like the human ego, and project itself and its ego-driven desires and ambitions onto the things and beings it dominates? We do not know. It would perhaps be useful, from a psychological and sociological point of view, to explore how this superintelligence, if it ever arrives, would affect the human race. How would it address issues like climate change, global poverty, the arms race, wars, nuclear weapons, and overpopulation? To stretch our imagination, would AI-powered machines have babies, go to parties, marry, divorce, and engage in other human-like activities? Or would they be totally different, beyond our present calculations and imagination?

I think the Indian thinker Jiddu Krishnamurti somewhere anticipated this AI revolution and poetically averred that machines cannot gaze at the stars in a night sky, implying that machines do not have creativity and imagination as we humans do. Would AI-powered machines prove Krishnamurti wrong? Would they also imagine as humans do, or perhaps imagine and create better than humans? Stretching the imagination, and perhaps indulging in exaggeration, can we say that AI machines would be the next creations of God, or the next step in Nature's continuous and infinite progress towards a destination that we humans do not know?

I believe that human beings can work together, rise above their narrow constructions, and think collectively and creatively to checkmate the mad rush to develop AI technologies. A hard law based purely on fear and punishment would perhaps not work. Such a law must be supplemented by something that is, to use Kantian terms, informed by concern for human dignity and self-worth, together with a moral dimension that treats collective human life as an absolute, not to be used or abused. It is true that if superintelligent machines come to rule humans, humans will be responsible for that fate. It is also true that if AI is creatively employed for the benefit of mankind, humans will be responsible for that as well. The choice lies before humans and their leaders.